Hi, I’m Jérémie 👋

I’m a PhD candidate in ML security and privacy at École Polytechnique Paris, supervised by Prof. Sonia Vanier and Prof. Davide Buscaldi. I also collaborate with Crédit Agricole as part of the “Responsible and Trustworthy AI” partnership with École Polytechnique.

My research focuses on security and privacy in machine learning, with a particular interest in the robustness of Large Language Models against privacy attacks. I have developed new adversarial attacks to reconstruct training data from multimodal models, designed an auditing method to predict LLM memorization in classification settings, and proposed a new taxonomy of memorization that aligns with attention mechanisms and enables fine-grained localization. I am currently working on new techniques for uncertainty quantification in LLM outputs, and I recently released a new benchmark on this topic.

I grew up near Aix-en-Provence, France, and followed the French engineering curriculum, including two years of “classe préparatoire” at Sainte Geneviève in Versailles. I then joined the engineering program at École Polytechnique, graduating in 2022. I also graduated from the ENS Paris-Saclay “MVA” Master's program in 2023, specializing in Mathematics, Vision, and Learning. I started my PhD at École Polytechnique Paris in 2023.

🔥 News

- 2025.11: 🥳 Our paper was accepted for an oral presentation at AAAI-26! See our preprint here!

- 2025.07: 🥳 Our paper was accepted to ECAI 2025! See our preprint here!

- 2025.04: 👨‍🏫 I attended the SaTML 2025 conference in Copenhagen. Very interesting papers and presentations!

- 2024.08: 🗣️ I was at the USENIX Security Symposium in Philadelphia to present our paper Reconstructing Training Data From Document Understanding Models.

- 2024.03: 🍾 The “Responsible and Trustworthy AI” partnership between Crédit Agricole and École Polytechnique has been signed! Check out this article here.

🔈 Invited talks

- 2025.11.24: AMIAD, Paris. AI Safety at Scale. Jérémie Dentan.

- 2025.10.23: PIAF, Palaiseau. Scaling Trustworthiness in the Era of Large Language Models. Jérémie Dentan. [Website of the association]

- 2025.01.28: FIIA 2025, Paris. Measuring and understanding privacy risks in Language Models. Sonia Vanier and Jérémie Dentan. [Slides] [LinkedIn Post]

- 2024.10.02: Google Responsible AI Summit, Paris. Towards security and privacy in document understanding models. Sonia Vanier and Jérémie Dentan. [Slides] [LinkedIn Post]

- 2024.10.02: Google Responsible AI Summit, Paris. Trust and Security in AI. Sonia Vanier and Jérémie Dentan. [Slides] [LinkedIn Post]

📝 Publications

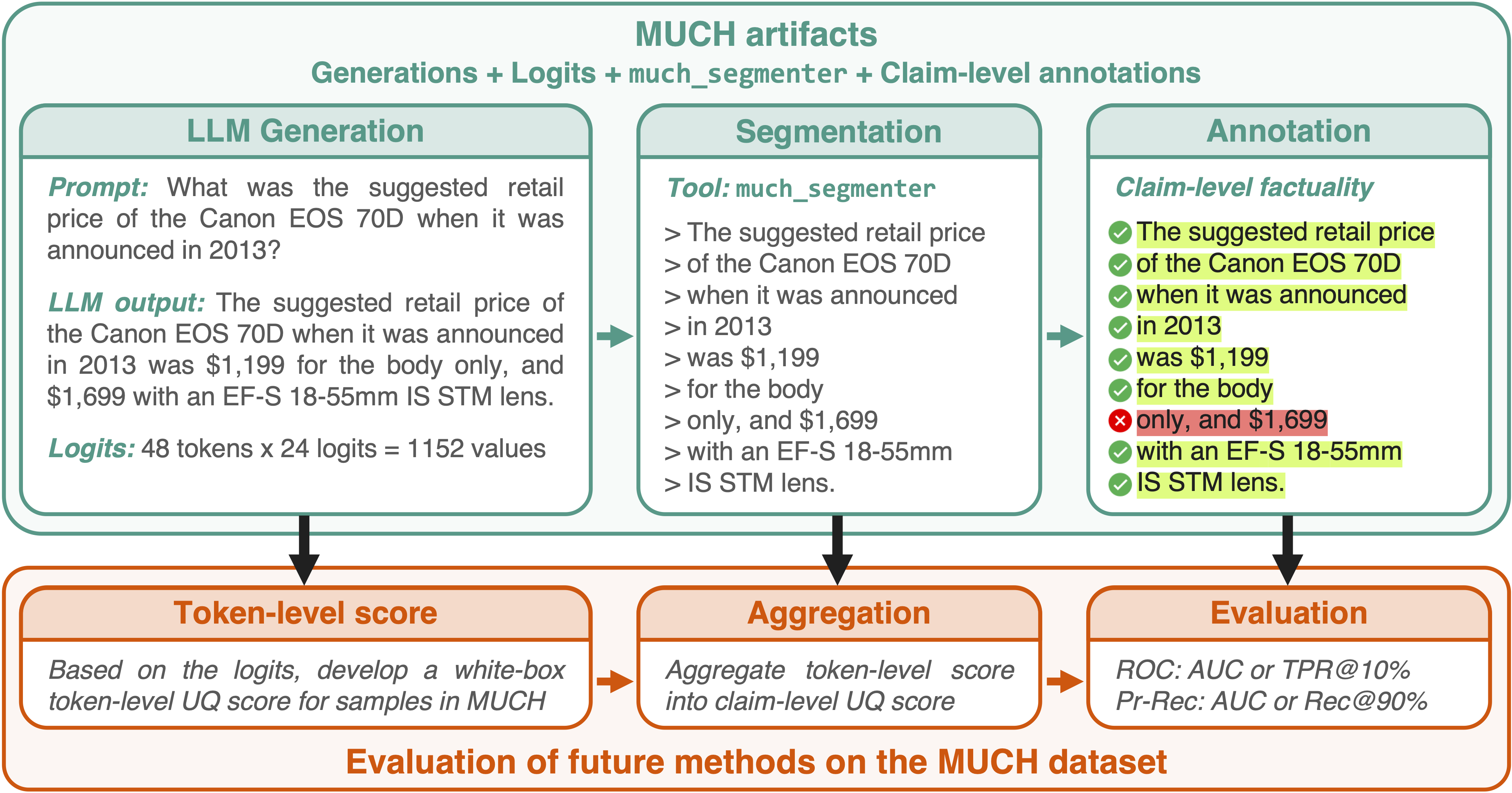

MUCH: A Multilingual Claim Hallucination Benchmark

arXiv Preprint - November 2025

Jérémie Dentan, Alexi Canesse, Davide Buscaldi, Aymen Shabou, Sonia Vanier

- A new claim-level benchmark for uncertainty quantification in LLMs

- Designed to mimic production monitoring of LLM outputs via a fast claim segmenter

- Open-sources all generation scripts and logits to facilitate future research

- [Hugging Face]

- [GitHub]

- [PyPI]

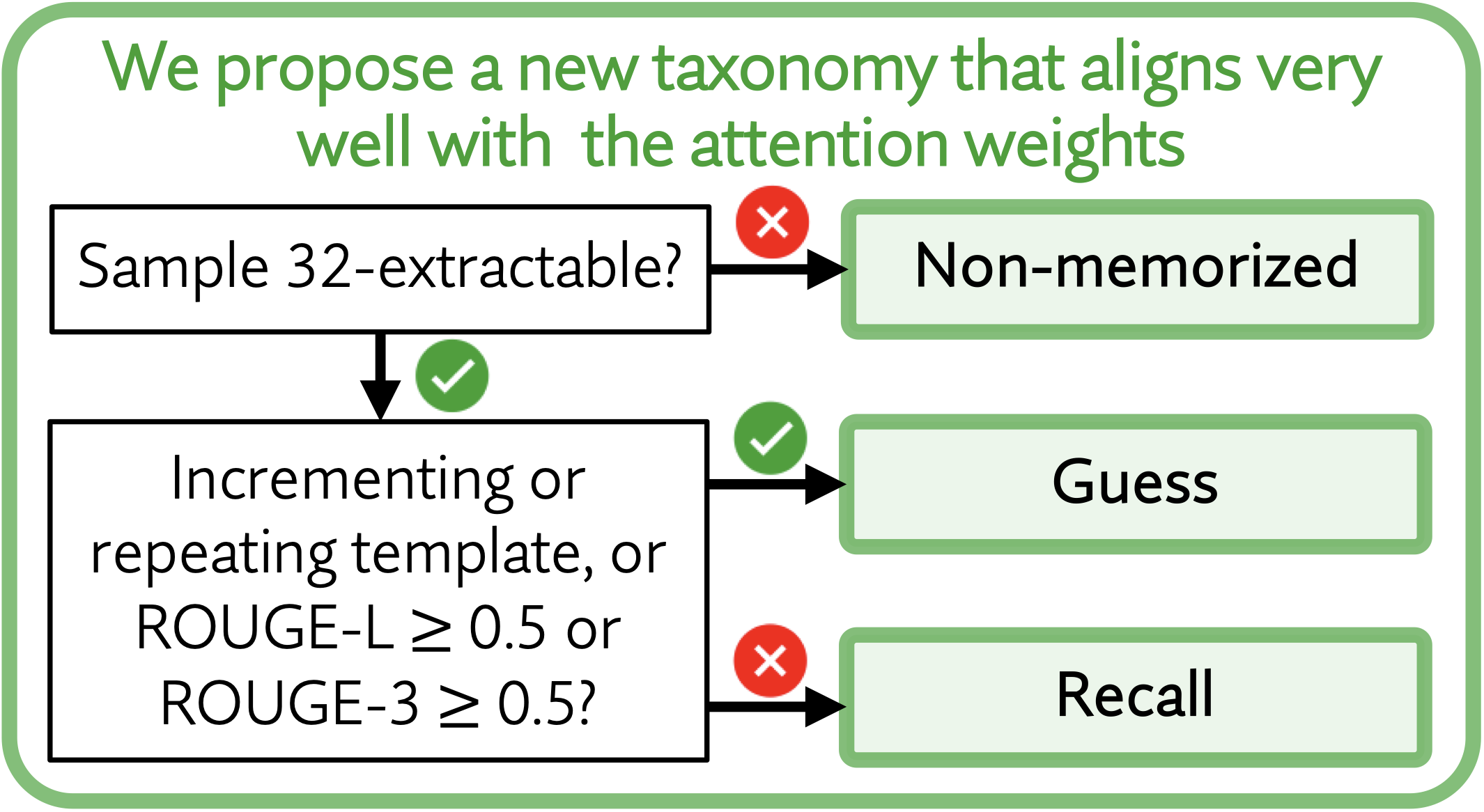

Guess or Recall? Training CNNs to Classify and Localize Memorization in LLMs

Association for the Advancement of Artificial Intelligence (AAAI) - January 2026

Jérémie Dentan, Davide Buscaldi, Sonia Vanier

- Analyzing attention weights with CNNs to identify patterns in verbatim-memorized samples

- Introducing a data-driven taxonomy of memorized samples

- Localizing the attention regions involved in each form of memorization

- [Code]

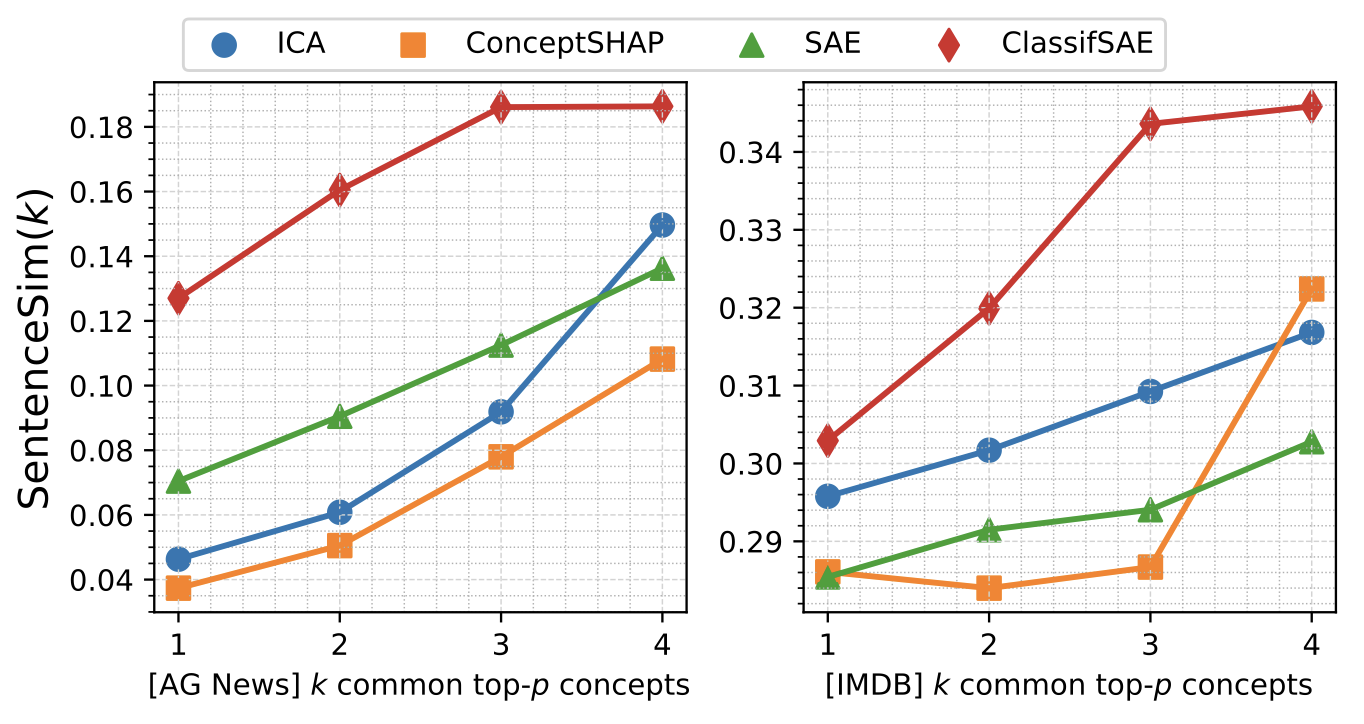

arXiv Preprint - June 2025

Mathis Le Bail, Jérémie Dentan, Davide Buscaldi, Sonia Vanier

- A new SAE-based method to extract interpretable concepts from LLMs

- Applicable to any LLM-based classification scenario

- Demonstrates strong empirical results

- [Code]

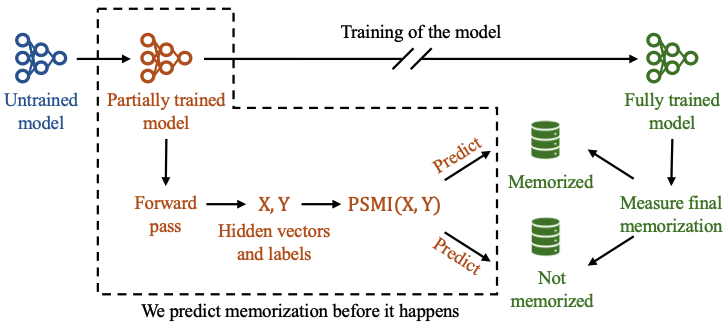

Predicting memorization within Large Language Models fine-tuned for classification

European Conference on Artificial Intelligence (ECAI) - October 2025

Jérémie Dentan, Davide Buscaldi, Aymen Shabou, Sonia Vanier

- An auditing tool for practitioners to evaluate their models and predict vulnerable samples before they are memorized

- Theoretical justification and strong empirical results

- Easy to use with a low computational budget

- [Code]

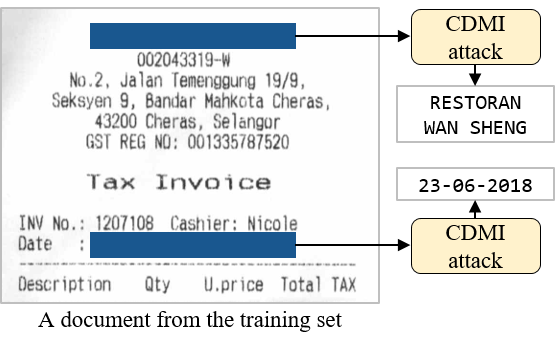

Reconstructing Training Data From Document Understanding Models

USENIX Security Symposium - August 2024

Jérémie Dentan, Arnaud Paran, Aymen Shabou

- Presents the first reconstruction attacks against document understanding models

- Strong empirical results, with up to 4.1% perfect reconstructions

- Focuses on the impact of multimodality on the performance of the attack

- [Slides] [Presentation Video]

- Using Error Level Analysis to remove Underspecification. Jérémie Dentan. 2023.

- Towards a reliable detection of forgeries based on demosaicing. Jérémie Dentan. 2023.

- Cellular Component Ontology Prediction. Jérémie Dentan, Abdellah El Mrini, Meryem Jaaidan. 2023.

📖 Education

- 2022-2023, Master of Science in Mathematics, Vision, Learning (MVA). ENS Paris-Saclay, Gif-sur-Yvette, France.

- 2019-2022, Master of Engineering in Applied Mathematics and Computer Science. École Polytechnique, Palaiseau, France.

- 2017-2019, Classe préparatoire MPSI/MP*. Lycée Privé Ste Geneviève, Versailles, France.

💻 Internships

- 2023.04 - 2023.09, Research Assistant. Crédit Agricole DataLab Groupe, Montrouge, France.

- 2022.04 - 2022.09, Research Assistant. Oracle Labs, Zurich, Switzerland.

- 2021.06 - 2021.08, Business Analyst. BearingPoint, Paris, France.

- 2019.09 - 2020.03, Deputy Project Manager for Civil Security. French Embassy, Antananarivo, Madagascar.